1. Apparently Alexa and its ilk are causing heartburn among legal scholars. How should conversations overheard by virtual assistants be treated when they are offered as evidence in court? Among the analogies being run up the metaphorical flagpole is a comparison with…parrots: an eavesdropper who can accurately repeat information it overheard but was not expected to disclose. Courts have refused to admit testimony by parrots. In one case, a parrot named Max repeatedly cried out, “Richard, no, no, no!” after the murder of his owner. The defense attorney in the case wanted to have this evidence admitted because the accused murderer’s name was Gary. The attorney argued, unsuccessfully, that the “testimony” was not hearsay, but rather like the output of a recording device. Despite expert testimony that that breed of parrot had the ability to accurately repeat statements, the evidence was excluded.

In another case, Bud the Parrot began incessantly repeating, “Don’t fucking shoot!” after one of his owners shot the other.

2. Portland radio station LIVE 95.5 has been using an artificial intelligence-generated DJ modeled after Ashley Z Elzinga when she isn’t available to host her show. They call the fake radio host “AI Ashley.” The station director said that he doesn’t even think the audience has noticed when the AI takes over. Wait, what? That’s unethical deception.

Ashley, meanwhile, thinks it’s all wonderful. “Outside of the industry, it’s a lot of tech people who are saying good job and embracing new technology, and they’re excited about this. I mean, this is a brand new thing,” Ashley Z said.

Oh, well, if it’s new, it must be wonderful. She’s happy because the station gave her a raise. She’s a fool. It’s hard to think of too many professions that could more easily be rendered obsolete by artificial intelligence than radio disc jockeys.

3. I vow that if I ever post an essay written by a chatbot, I will inform readers up front. Unless, of course, I’ve been a robot all along….

4. …which reminds me: a professor at a small college who recently became chair of the English department asked The Ethicist if it would be unethical for him to use ChatGPT to draft the “reports, proposals, assessments and the like” so he would have more time to devote “to students and my own scholarship.” “Is it OK to use ChatGPT to generate drafts of documents like these, which don’t make a claim to creative ‘genius’? Do I need to cite ChatGPT in these documents if I use it?” he added.

Kwame Anthony Appiah replied by comparing the use of the A.I. to using a template, and said he saw nothing wrong with what the professor proposed as long as he diligently checked the drafts. He also saw no reason to cite this use of the bot.

I do. Until the ethics of this use of artificial intelligence is vetted and measured, I think best practice is to always cite it, at least until certain products are assumed to involve A.I. assistance.

On point #4, I would propose this for consideration. The birth of modern computing came about in part because mathematician David Hilbert noted that many mathematical theorems followed formulaic practices, and he wondered if all mathematical theorems could be generated by some systematic process. This led Church and Turing to develop, respectively, the lambda calculus and Turing machines, which were shown to be equivalent, and thus to the Church-Turing thesis: that anything that can be computed can be computed on a Turing machine. They were then able to show that there are mathematical questions that no such systematic process can decide, answering Hilbert in the negative.

When we consider AI as it stands now, it still operates on conventional computers, which are effectively superfast Turing machines. Thus there are tasks that AI simply will not be able to do, period. But the question of the computation of rote documents is perhaps something that AI is able to do. In the entire space of possible documents, AI is reasonably capable of deciding whether a document strings together sentences properly, and can even make a reasonable effort at deciding if a document is relatively cohesive. As long as these documents abide by rigorous rules, AI could certainly generate documents and decide if those documents meet those rules. But keep in mind that this is only as effective as the AI’s ability to produce an example document out of the space of all possible documents, and the rules governing the type of document being sought. Loosen the rules too much, and pretty quickly you have an undecidable problem, and you can deliberately make your queries such that the AI will perform arbitrarily poorly.

So the real question is, in what situations do you want to apply AI, because by applying AI you effectively concede the documents being produced are so formulaic that human intelligence is not truly needed? For paperwork that has lots of repetition and follows rigorous patterns, I think that would be acceptable. It wouldn’t be that much different than copying a previous document and brushing up a few details to make it pertinent for the current purpose. For anyone doing anything marginally creative, though, it would be a risk that the AI could outperform you, and what does that say about your work?

And remember, given an infinite amount of time, a thousand monkeys with typewriters could reproduce the entire works of Shakespeare.

#4. It is worse than you think. I suspect a large number of the documents the professor is talking about will never see the light of day. There is a vast amount of paperwork that is required to be done and filed. I mean that literally. It is required that the paperwork be done and that it be filed, and those are the only requirements. These documents will never be read or used for any purpose; they are just required to exist. I see no problem using ChatGPT for such documents if it frees up time for him to dedicate to things that actually matter.

If you want an example of such documents, imagine being required to write a five-page document about the impact adding a lacrosse team to the school will have on your department, with an analysis of whether or not the team should be added based on that information. Imagine that the lacrosse team had been approved and a coaching staff hired two months BEFORE you were told to write the report. So this report exists only because the administration found out they were required to gather input from their departments about the program as part of the decision-making process.

I am very wary of the use of AI, but this is one use I can get behind.

unless, of course, I’ve been a robot all along….

Obviously, people are going to the wrong source to ask such technical questions:

Authentic Idiot

Well, she isn’t wrong; she just used a lot of words to get there.

Re AI and Disc Jockeys. Casey Kasem was apparently resurrected by stations needing to fill airtime cheaply. AI requires no health or retirement benefits and never takes vacations.

Regarding DJs: I am looking forward to the return of Wolfman Jack to the airwaves….

I am one who does not like doing important reports using forms (fill-in-the-blanks) or boilerplate formats. It is efficient but does not give the feeling of a true accomplishment. I will do first drafts and/or outlines as a framework for something, however. Using AI to build that framework or outline should not require attribution; if AI provides a significant portion of the finished product, it’s a different story.

Since the original issue was raised by a professor, maybe he could introduce the whole thing as an experiment in just how AI might fit in as a tool for assisting a writer. The professor and the class would, as a group, serve as “lab rats” for the greater good. That way no one is led to believe that AI was not involved.

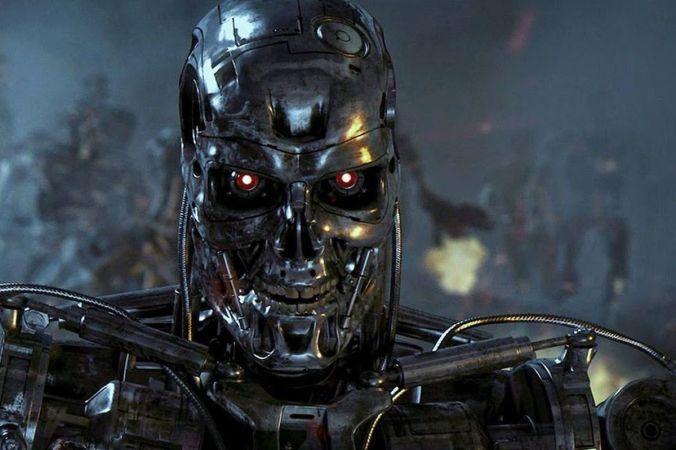

I don’t know why, but “Robocop” and “Pinky and the Brain” came to mind while writing this comment.

I almost mentioned the Wolfman. I always wondered how many Sirius-XM listeners didn’t know he was dead. Same with Casey Kasem.

“I think best practice is to always cite it”

I find this statement odd since your post about the black infant mortality rate was plagiarized and you didn’t indicate that most of your sentences were lifted almost verbatim from another article.

I checked the article. I was reporting on the analysis presented in the Daily Signal, and linked to that article, which is exactly like disclosing a source. I write several thousand words a day for the entertainment and enlightenment of readers. My analysis is original, and if another commentator’s points are used, they get links or mentions. On occasion, in relaying the substance of another article, I do not re-phrase a statement as completely as I should, but it is an innocent mistake. I am not being graded nor do I profit in any way from what I write.

So you have been waiting to spring this “gotcha!” for five days, so you could accuse me of plagiarism. That is not the act of a good faith commenter: if the post bothered you, the ethical response was to alert me that my post was, in your view, too similar to one of its sources.

You’re a troll, Tommy. Get off my blog. No more of your snotty comments (and almost all of them have been snotty as well as hostile) will be accepted here.

And now I’ll go fix that post.

(Which by no possible interpretation was “lifted verbatim”…but never mind.)

Interestingly, TT is the 8th banned commenter of 2023, and there are more than five months to go. Only three were banned in all of 2022.

I am not sure if banning a commenter who appears to be a troll is the best practice. You may be playing right into their game: they work to get banned, then claim you shut down their speech. I know it is your blog, but no one has to respond to morons. I learned a long time ago not to respond to those who resort to snide attacks on me instead of legitimate discussion or debate.

One of the primary tools of the left is to make an accusation and then force you to defend yourself. Nothing in TT’s comment provided any evidence that his assertion was factual. We must stop being defensive and go on the offensive – make them prove their allegations. Their goal is to make you respond, using up your time in doing so. Make them do the work. Make them make their case using actual comparative evidence. Let them waste their time and not yours.

It’s the best practice for what EA is here to do. The quality of the comments vastly enhances and supplements the value of the posts themselves. I have (so far) made over 61,000 comments in exchanges with commenters. These are also designed (well, most of them) to stimulate discussion. Trolls and assholes just make the experience of working on the blog unpleasant as well as burdensome for me, and less positive for serious, intelligent and good faith participants as well. I’d rather have 10 substantive comments on a post than 100 snarks, gags, and insults.

I ban commenters only after several offenses and usually after warnings. They can claim anything they want afterwards—free speech, you know.

I didn’t think Tommy’s prior comments were that snotty. They WERE standard left-wing talking points, and I was going to respond to at least one of them to get his full measure. I understand you banning him for this cheap shot, but I noticed you didn’t object to his other comments at the time.

Sure I did. I just gave him a chance to settle down as well as the benefit of the doubt. His comment about my experience with the CLE provider was deliberately obnoxious and a tell, but I let it go with a nice, moderate response. Let me remind you: that post was about how I complimented live, in person participants in a seminar that was also streamed on Zoom, explaining, 100% accurately, that they were getting a more valuable CLE experience. TT wrote back in part,

“Let this be a learning experience for you: if you denigrate the service you are offering as you are offering it, people will be less likely to pay you for that service. Even if you think you have been given inadequate tools to perform that service equally for all patrons, it is still your responsibility to do your best with the tools you are given. You seem to understand this when it comes to people providing a service to you, but when you are the one providing the service, you think it’s your job to throw veiled criticism at both your customers and your employers. If you wouldn’t accept this from a tech help or a CVS cashier, I’m not sure why you think others should accept it from you.”

1. Snotty and presumptuous: don’t tell me how to do my business.

2. I did NOT denigrate “my service.”

3. I don’t THINK Zoom is inferior to live training, it IS inferior, and everybody knows it.

4. I always do my best with whatever the situation is; that’s my theater training. It doesn’t mean that those who do an inadequate job supporting me should not be so informed.

5. His references to earlier posts were also cheap shots, and not similar in any way to the situation discussed in the post.

As I say, I gave him the benefit of the doubt, while strongly suspecting that his purpose was to attack me personally. And my suspicions were borne out. Yes, he was not “warned,” and in a few cases, commenters who don’t just cross a line but obliterate it have been banned based on signature significance.

I missed his comment on the CLE incident. I thought the Brown University post was where he first came in.

I fully understand your point. It is not your job to give them a forum. My concern is that responding to trolls wastes everyone’s time, which is what they want. I would just say “prove it” and let them put up or shut up. No one needs to respond to a troll after you demand proof. That was my only suggestion.

It is quite sad that maladjusted individuals don’t understand that attempting to dim someone else’s light does not make theirs shine brighter. The exact opposite occurs. While I don’t think they should be coddled or encouraged, I do feel sorry for them. Paradoxically, shunning them and withholding the attention they crave is the best way to deal with their disruptive behavior.

Indeed.