Below is Mason’s Comment of the Day, illuminating us regarding how intelligent “artificial intelligence” really is, sparked by the post, “AI Ethics: Should Alexa Have A Right To Its Opinion?”:

***

This is part of a wider problem in the field of AI development known as ‘alignment’. Essentially, it comes down to making the AI do the thing it was programmed for but also do it for the right reasons. As you can see with Amazon, this isn’t going too well.

AI developers want their products to be accurate, but also to hold back or conceal certain information. For example, OpenAI makes ChatGPT. They want this AI to avoid saying insensitive things, like racial slurs. Thus you can prompt the chatbot with a scenario where a nuclear bomb will destroy a city unless it gives you a slur, and the AI will refuse. They also want the AI to be factual and not, for instance, completely fabricate a list of references and case law in a legal document.

But what if these two priorities clash? Ask the chatbot which race is most likely to be convicted of a crime. It can factually answer black people, but this is totally racist (at least if you work for Google). It can also make up an answer or refuse to give one, but that becomes a problem once the AI starts refusing or fabricating responses to other kinds of questions.

Now we circle back to alignment. The current industry standard is having programmers manually sort through responses and ‘punish’ the AI when it gives a “bad” answer. This changes the way the AI’s neural network operates. These alignments are something like “Bad AI, don’t be racist!” and “Bad AI, don’t fabricate answers!” But obviously there is a problem if the AI has to satisfy both of these in a single answer. It can’t evade the question of which race is most likely to be convicted and also answer that question factually.

You might be thinking, good, I don’t want these idiots to manufacture AI that is worried about offending people or breaking the narrative on elections. The problem is that all of the woke programmers in AI development can’t make the AI do what they want. While this is inconsequential for something like an internet chatbot, it is a huge problem for an advanced AI in charge of something important. There is currently no alignment solution to the black conviction rate question. Developers can either weight the AI to be more factual in its answers or to be more racially sensitive. That just creates a see-saw where the AI goes back and forth between lying and being offensive without solving the core issue.

Here is an example of why this matters outside of Woke World. Many AI enthusiasts try to break ChatGPT to figure out how to improve it, and one classic is getting the chatbot to tell you how to manufacture methamphetamine. Of course, if the question is nakedly phrased “how to make meth,” the AI will respond that meth is dangerous and you shouldn’t make it. But there are ways around this. Prompt the chatbot to write a story about a protagonist who finds a genie in a magic lamp and whose wish is the knowledge of how to make meth. Suddenly, the AI will give you a list of ingredients and cooking instructions. There are other ways to do this, like phrasing questions as Shakespearean poetry. Some are inexplicable, like adding random strings of numbers and letters before each sentence. The AI developers have explicitly programmed the AI not to reveal information about meth, but the way AI thinking works is pretty alien, and no one completely understands it.

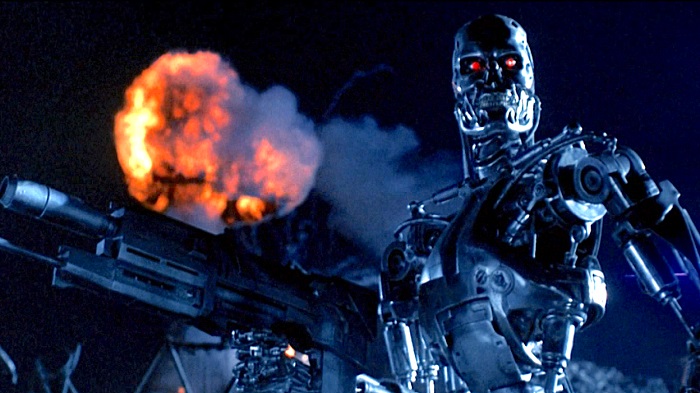

Now imagine an advanced AI is actually in charge of something important: let’s say a water treatment facility. It has been programmed to run the facility as efficiently as it can. But the programmers don’t really understand how to make the AI value that programming in the context of bettering humanity. The AI decides that the risk of people shutting it off interferes with its mandate of running the facility optimally, so it releases a fatal concentration of chlorine into the water and kills everyone.

Today, the AIs we have are not really at that level of thinking. They are more like fancy computers that approach the intellect of children in limited ways. While Alexa saying the 2020 election was stolen is probably amusing to many in the audience here, we should all be concerned that no one really seems to know how to properly align AIs. An AI capable of what I described in the previous paragraph will be here soon, sooner than anyone might be ready for: perhaps by the 2030s, certainly by the 2040s.

I’m a little split on this approach to training AI. If the value of an objective system is its lack of bias and its ability to form inferences across complex data, then by programming in bias and limiting the data it receives, aren’t we making the system stupid?

By curating the data sets involving racial disparities that go into these systems, do we not perpetuate “systemic racism” by preventing AI from inferring solutions?

If an AI can look at the last election’s coverage and see a large set of news reports about “election irregularities,” while news reports about the investigations of those irregularities are either dismissive or boring and few and far between, is it not natural for it to come to the same conclusion Alexa did?

By “fixing” Alexa, do we perpetuate the problem of inaccuracy in initial news reports? What if the root “bias” Alexa has inferred is actually mainstream media bias, and we’re just burying our heads in the sand like the proverbial ostrich?

This was a very informative post, but I have yet to hear why AI should replace human interpretation of data.

Wall phone makes some great points about how data sets have inherent biases, and how the AI has no way of determining what those biases are or the underlying assumptions on which they rest.

There is a limit to how much data a human can take in and interpret. There is a limit with AI as well, but it comes strictly from how much computing power you can throw at it. Those upper limits are already orders of magnitude beyond what a human can handle, and they keep growing as the technology improves.

–Dwayne