This is one of those relatively rare emerging ethics issues that I’m not foolhardy enough to reach conclusions about right away, because ethics itself is in a state of flux, as is the related law. All I’m going to do now is begin pointing out the problems that are going to have to be solved eventually…or not.

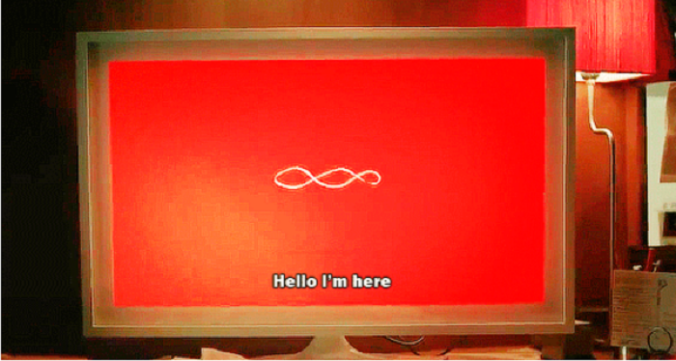

Of course, the problem is technology. As devotees of the uneven Netflix series “Black Mirror” know well, technology opens up as many ethically disturbing unanticipated (or intentional) consequences as it does societal enhancements and benefits. Now we are all facing a really creepy one: the artificial intelligence-driven virtual friend. Or companion. Or lover. Or enemy.

This has been brought into special focus because of an emerging legal controversy. OpenAI, the creators of ChatGPT, debuted a seductive version of the voice assistant last week that sounds suspiciously like actress Scarlett Johansson. What a coinkydink! The voice, dubbed “Sky,” evoked the A.I. assistant with whom the lonely divorcé Theodore Twombly (Joaquin Phoenix) falls in love in the 2013 Spike Jonze movie, “Her,” and that voice was performed by…Scarlett Johansson.

Johansson says that the company asked to license her voice for “Sky,” and she refused, twice, but nevertheless, Sky’s voice sounds “eerily similar to mine.” Her lawyer told OpenAI to stop using “Sky,” or else.

OpenAI suspended its release of “Sky,” but said in a blog post on Sunday that “AI voices should not deliberately mimic a celebrity’s distinctive voice — Sky’s voice is not an imitation of Scarlett Johansson but belongs to a different professional actress using her own natural speaking voice.”

There’s the first question! When is sounding a lot like a celebrity sounding too much like a celebrity? This issue sort of arose when celebrity impressionists were deliberately used on radio ads to imply that the actual celebrity endorsed a product. Eventually a disclaimer became mandatory. However, if an actress, using her natural voice, sounds like a more famous actress, is her voice work inherently deceptive and unethical? And what is an actress’s “natural” voice anyway?

In this case, it is fair to say, OpenAI deliberately was trying to have “Sky” sound like Scarlett, so its statement was disingenuous.

The larger and more complicated question is whether deliberately making non-humans appear to be sufficiently human to engender an emotional response, such as trust, respect, affection, lust, even love, is unethical or just icky, as in “creepy as hell.” I’ve made my own personal call, and it’s not changing: I wanted no part of Siri, and I want no part of “Sky” or the other ChatGPT personas (“Breeze,” “Cove,” “Ember,” and “Juniper”; why do they all sound like strippers?). I especially feel that way now, when my newly thrust-upon-me personal situation resembles that of Theodore Twombly. It might be tempting, indeed addictive, to have a ChatGPT that sounds like Grace around the house, letting me discuss movies, current events, business, the Red Sox and my anxieties with someone more verbal than my son and more knowledgeable than my dog. I am adamant, however, in my conviction that, as King Lear said, “That way madness lies.”

Such addictions are another step down the slippery slope described by the Unabomber, which I witness every day when I see people walking along on beautiful Northern Virginia mornings with flowers everywhere around them, staring at their cell phone screens, ignoring other people, neighbors, their dogs and sometimes the cars they step in front of, like mine.

I believe that this trend is destructive to society and humanity, and I won’t participate in it or tempt myself to be sucked into it. Maybe resistance is futile. Maybe pushing vulnerable human beings into facile relationships with machines because real relationships are too unpredictable and painful isn’t wrong, just new; at this point, that can’t be answered definitively. As with the cloning of dinosaurs in “Jurassic Park” (and didn’t that work out well?), there is no question that these fake humans will be created and will be made available. Maybe it won’t result in disaster, but if it doesn’t, that will be moral luck.

I believe the ethical action now is to refuse to join the parade.

In a very disappointing essay that accompanies the Johansson story in today’s Times, Alissa Wilkinson claimed to be answering the question, “What We Lose When ChatGPT Sounds Like Scarlett Johansson.” She didn’t deliver on that tease at all: Hey, maybe this wasn’t really the Times movie critic, but an AI chatbot that writes like her! Here was her entire “answer” to the implied question she posed, in the final paragraph of a long piece:

But if the point of living lies in relationships with other people, then it’s hard to think of A.I. assistants that imitate humans without nervousness. I don’t think they’re going to solve the loneliness epidemic at all. During the presentation, [Mira Murati, OpenAI’s chief technology officer] said several times that the idea was to “reduce friction” in users’ “collaboration” with ChatGPT. But maybe the heat that comes from friction is what keeps us human.

We lose our freedom from nervousness? Gee, a chatbot could have come up with something more perceptive than that.

This one is clear to me.

I’ve been following this story since it came out and, based on the information I’ve read and heard from multiple sources, I think OpenAI had a goal of using Scarlett Johansson’s voice for “Sky,” and they unethically rationalized how they accomplished that goal after Johansson turned them down. In the process of pursuing their goal, OpenAI crossed a legal boundary and very intentionally violated Scarlett Johansson’s rights. If this goes to court, the court should rule in favor of Scarlett Johansson and make a public example of OpenAI by clobbering them with a huge dollar award, thus creating some kind of precedent that can be used in cases where companies intentionally violate the individual rights of famous people like this.

I’m anti-AI right now and I’ll likely remain that way until I see some serious legal protections limiting what AI can do.

I think Wilkinson has got something backwards. Relationships with real people prompt nervousness in a way that interaction with AI doesn’t, because a real person has feelings that can be hurt and goals that might conflict with one’s own goals. We have to attend to the needs and wants of real people in ways that we don’t have to with AI, and navigate sensitive situations to try and figure out how, against all odds, to help people find a path forward that they feel good about.

That all can be quite stressful, but it’s also something that we can’t afford to lose, both because we all need the help of real people at some point or other and because it would be a huge step backwards for humanity both collectively and individually. The skill of helping other people is part of what enables humanity to be so constructive, to collaborate and accomplish more together than the sum of what people could accomplish individually. Losing it would be like losing mathematics, or language, or imagination, or the ability to use tools.

That said, I have nothing against using AI to help train humans on how to use empathy, just so long as it doesn’t distort their calibration. It’s not fundamentally different from listening to fictional stories, acting out imaginary scenarios, or even using scripted interactive software. As long as they understand the difference between a real person with desires and an algorithm simulating the responses of a person, AI can be a useful tool. It just can’t be a permanent substitute.

If you haven’t watched “Black Mirror,” you should, EC. In fact, I’ll offer this: if you write an ethics review of any episode, I’ll post it.

I recommend skipping the very first episode. Not because it’s bad, but because it’s icky enough that it put me off the series for a long time.

Is that the “have sex with the pig” episode? Still a great ethics hypothetical…but yes, icky.

Yup, that’s the one.

I just finished watching the first episode and it reminded me a little bit of the movie “Inception.” Is “Black Mirror” the 21st century version of “The Twilight Zone”?

In a word, yes, though narrower in scope.

To be clear, the episode I watched first was Season 6, Episode 1. For some unknown reason Netflix started with that episode when I clicked on the link.

Thanks! I may very well take you up on that. They’ve got Netflix at the Red Cross donation center, and apparently some of the episodes of Black Mirror involve simulating people’s consciousnesses, which raises interesting questions about the difference between a person and a software tool. I’m looking forward to answering those.

I hope so. It’s a serious proposal.

Unfortunately I watched the entire season 6 of the Black Mirror series before noticing that Netflix didn’t start me watching the series at the beginning. Arrgh!

I’ve watched a total of 18 episodes of the series, and one of the first things that jumped out at me is that the writers and producers seem to be obsessed with using electric vehicles and showing viewers how technology can be addictive and/or thoroughly abused.

There are a couple of really weird episodes and a couple of really boring episodes. So far, all the episodes are a bit off the wall.

Off topic for just a moment.

EC,

You should look up groups in your area like the Wisconsin Alliance for Civic Trust. I think you could have useful input into what these groups are trying to accomplish.

Thanks

Steve

Now back to our regularly scheduled commentary.

Thanks Steve, much appreciated! I’ll reach out to this organization and the ones from the linked resources. They seem like they may be ideal partners.

This reminds me of an episode of Futurama. In the future, people can have robot clones of famous celebrities to be their boyfriend/girlfriend (Season 3, Episode 15: “I Dated a Robot”). About halfway through you find out they were taken against the celebrities’ will, to the point that it actually caused them pain.

I don’t think that is going to happen here, but I think some similar things can apply. They even had an infomercial in the cartoon about how people will miss out on life by spending all their time with something that is never going to give them what they need. Might be worth checking out.

You say that now, but I get enough spam calls from essentially slaves in a call center who barely sound human that I’d welcome having them come from a better-sounding AI.

On a serious note, making AI that sounds like humans is both unethical (deceptive) and shortsighted. We could have distinctive-sounding AIs that could become famous for their voices. Think of the guy playing HAL in “2001.” He made an intentionally robotic voice as the computer. Or KITT, who sounded clearly like a robot, but was also entertaining and sarcastic. I have stories about Cortana (the AI from the Halo videogame) that I cannot share due to an NDA, but some of the discussed approaches to hit the robot/human line were intriguing.

I do not want robot voices that sound like humans. I want robot voices that sound like robots and become recognizable on their own terms.

A variation on a theme: I have a number of friends who do voiceover work. One in particular was just building demand (he has a distinctive bass voice and knows how to read a line) when suddenly he wasn’t getting as many gigs. Turns out that some AI outfit somewhere in Eastern Europe had access to enough things he’d done that they could, and did, duplicate his voice.

Unlike Scarlett Johansson, he’s not famous, and whereas if I hear something he’s done, I know immediately that it’s him, the average person wouldn’t recognize his voice. Of course, he also wouldn’t be likely to successfully sue some AI firm in Ukraine or Azerbaijan or Belarus or wherever.

Another friend who has a very successful career as a voice actor (voicing cartoon characters and video games, that sort of thing) hasn’t been affected… yet. But I suspect her reckoning is coming soon.

It’s troubling, but I don’t see us putting that toothpaste back in the tube.